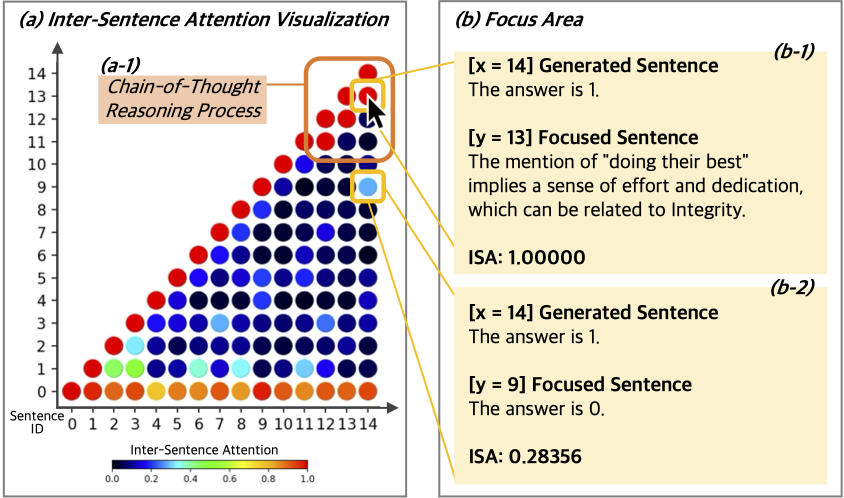

SAVIS (Sentence-level Attention VISualization) is a Python library for visualizing inter-sentence attention in large language models (LLMs). This tool enhances the interpretability of LLMs by providing an intuitive visualization of how attention is distributed across sentences in generated text.

- Inter-sentence attention calculation

- Interactive visualization of attention patterns

- Support for various LLMs through Hugging Face's

transformerslibrary

pip install savisfrom savis import TextGenerator, ISA, ISAVisualization

# Initialize the text generator with your chosen model

generator = TextGenerator("Model name")

# Generate text and get attention data

input_text = "Your input prompt here"

generated_text, attentions, tokenizer, input_ids, outputs = generator.generate_text(input_text)

# Calculate inter-sentence attention

isa = ISA(outputs.sequences[0], attentions, tokenizer)

# Visualize the attention patterns

vis = ISAVisualization(isa.sentence_attention, isa.sentences)

vis.visualize_sentence_attention()-

TextGenerator: Interfaces with the LLM to generate text and extract attention information. -

Attention: Manages the underlying LLM and provides methods for obtaining attention data from the model. -

ISA(Inter-Sentence Attention): Processes raw attention data to compute attention between sentences. -

ISAVisualization: Creates interactive visualizations of the computed inter-sentence attention.

These components work together to provide a comprehensive pipeline from text generation to attention visualization:

-

TextGeneratoruses the LLM to generate text based on input prompts. -

Attentionhandles the interaction with the LLM, extracting detailed attention information. -

ISAtakes the raw attention data and computes meaningful inter-sentence attention scores. -

ISAVisualizationtakes these scores and creates interactive visualizations.

SAVIS is released under the MIT License. See the LICENSE file for more details.