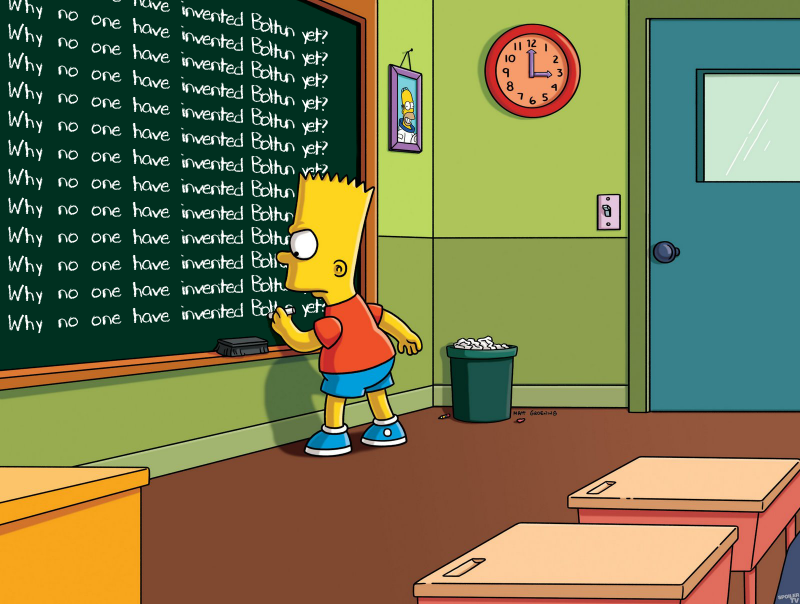

Boltun (aka rus. Болтун)

Generates massive datasets from just a single template.

Command:

> python -m boltun "{{ Hi || Hello }}, [[ 'welcome' | upper? ]][[? ' back' ]] to Boltun [% repeat('!', [1, 3]) %]"

Hi, WELCOME back to Boltun !

Hello, WELCOME back to Boltun !

Hi, welcome back to Boltun !

Hello, welcome back to Boltun !

Hi, WELCOME to Boltun !

Hello, WELCOME to Boltun !

Hi, welcome to Boltun !

Hello, welcome to Boltun !

Hi, WELCOME back to Boltun !!!

Hello, WELCOME back to Boltun !!!

Hi, welcome back to Boltun !!!

Hello, welcome back to Boltun !!!

Hi, WELCOME to Boltun !!!

Hello, WELCOME to Boltun !!!

Hi, welcome to Boltun !!!

Hello, welcome to Boltun !!!
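The 16 lines above follow from four variable slots with two alternatives each (2 × 2 × 2 × 2). The sketch below is not Boltun's implementation and does not parse the template syntax. It hard-codes the alternatives inferred from the output above and combines them with `itertools.product`. The names `SLOTS` and `expand` are hypothetical, chosen only for illustration.

```python
from itertools import product

# Alternatives for each part of the template, inferred from the sample output.
# Fixed text is modelled as a slot with a single alternative.
SLOTS = [
    ["Hi", "Hello"],            # {{ Hi || Hello }}
    [", "],                     # literal text
    ["WELCOME", "welcome"],     # [[ 'welcome' | upper? ]]  (optional filter)
    [" back", ""],              # [[? ' back' ]]            (optional content)
    [" to Boltun "],            # literal text
    ["!" * n for n in (1, 3)],  # [% repeat('!', [1, 3]) %]
]


def expand(slots):
    """Yield every combination, with the first slot varying fastest."""
    # itertools.product varies its LAST argument fastest, so the slots are
    # reversed going in and each combination is reversed coming out.
    for combo in product(*reversed(slots)):
        yield "".join(reversed(combo))


lines = list(expand(SLOTS))

for line in lines:
    print(line)
```

Reversing the slots is only there to reproduce the ordering of the listing above. The set of generated strings is the same either way.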

| Branch | CI status |
|---|---|
| master | |
| develop | |

About

Boltun is designed to be the best alternative for natural language machine-learning developers and enthusiasts.

It was developed as an alternative to projects such as Chatito and Tracery. They are almost perfect, especially Tracery, but both are written for the Node.js platform. Node.js runs JavaScript on a single thread by default, so those tools are well suited only to small amounts of data. Boltun aims to break through those data generation limits.

Getting Started

Installing

Using pip (PyPI repository):

pip install boltun

Boltun is also available on Docker Hub:

docker pull meiblorn/boltun

For macOS users, Boltun is available via Homebrew:

brew install boltun

Usage

python -m boltun "<your template>"

Contributing

You are welcome to contribute! Just submit your PR and become a part of the Boltun community!

Please read contributing.md for details on our code of conduct, and the process for submitting pull requests to us.

Versioning

We use SemVer for versioning. For the versions available, see the tags on this repository.

Authors

- Vadim Fedorenko - Meiblorn - Initial work

See also the list of authors who participated in this project.

License

This project is licensed under the MIT License. See the LICENSE.md file for details.

Acknowledgments

- Your questions will appear here. Feel free to ask me.