Extract structured data from remote or local LLMs, because predictable output is important for serious use of LLMs.

- Query structured data into Pydantic objects, dataclasses or simple types.

- Access remote models from OpenAI, Anthropic, Mistral AI and other providers.

- Use local models like Llama-3, Phi-3, OpenChat or any other GGUF file model.

- Besides structured extraction, Sibila is also a general-purpose model access library that generates plain text or free JSON results, with the same API for local and remote models.

- Model management: download models, manage configuration, quickly switch between models.

No matter how well you craft a prompt begging a model for the format you need, it can always respond with something else. Extracting structured data can be a big step toward getting predictable behavior from your models.
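To make the problem concrete, here is a minimal sketch of what tends to happen when the format is only requested in the prompt. It uses the standard library alone and does not call Sibila; the `parse_reply` helper, the reduced `Info` dataclass and both sample replies are hypothetical and exist only for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class Info:
    first_name: str
    last_name: str
    event_year: int


def parse_reply(reply: str) -> Info:
    """Parse a reply that was only *asked* to be JSON.

    Raises ValueError when the reply is not valid JSON or lacks a field.
    """
    data = json.loads(reply)  # json.JSONDecodeError is a ValueError subclass
    try:
        return Info(
            first_name=str(data["first_name"]),
            last_name=str(data["last_name"]),
            event_year=int(data["event_year"]),
        )
    except (KeyError, TypeError) as exc:
        raise ValueError(f"reply does not match the expected format: {exc}") from exc


# Two hypothetical replies to the same prompt.
well_formed = '{"first_name": "Neil", "last_name": "Armstrong", "event_year": 1969}'
chatty = "Sure! The first man on the moon was Neil Armstrong, in 1969."

if __name__ == "__main__":
    print(parse_reply(well_formed))
    try:
        parse_reply(chatty)
    except ValueError as exc:
        print("could not parse:", exc)
```

The second reply contains the right facts but cannot be parsed, which is the kind of failure that structured extraction is meant to avoid.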

See What can you do with Sibila?

To extract structured data from a local model:

    from sibila import Models
    from pydantic import BaseModel

    class Info(BaseModel):
        event_year: int
        first_name: str
        last_name: str
        age_at_the_time: int
        nationality: str

    model = Models.create("llamacpp:openchat")

    model.extract(Info, "Who was the first man in the moon?")

Returns an instance of class Info, created from the model's output:

    Info(event_year=1969,
         first_name='Neil',
         last_name='Armstrong',
         age_at_the_time=38,
         nationality='American')

Or to use a remote model like OpenAI's GPT-4, we would simply replace the model's name:

    model = Models.create("openai:gpt-4")

    model.extract(Info, "Who was the first man in the moon?")

If Pydantic BaseModel objects are too much for your project, Sibila supports similar functionality with Python dataclasses. It also includes asynchronous access to remote models.
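As a rough sketch of the dataclass route, the same `Info` structure can be declared with the standard library instead of Pydantic and passed to `extract` in the same way. So that the snippet runs without a model download or an API key, it uses a hypothetical `StubModel` that returns a canned answer; with Sibila installed, an object from `Models.create(...)` would take its place. The `first_on_the_moon` helper is also just an illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class Info:
    event_year: int
    first_name: str
    last_name: str
    age_at_the_time: int
    nationality: str


def first_on_the_moon(model) -> Info:
    """Ask any object exposing extract(target, query), as in the examples above."""
    return model.extract(Info, "Who was the first man in the moon?")


class StubModel:
    """Hypothetical stand-in for a Sibila model; it returns a fixed answer."""

    def extract(self, target, query):
        return target(
            event_year=1969,
            first_name="Neil",
            last_name="Armstrong",
            age_at_the_time=38,
            nationality="American",
        )


if __name__ == "__main__":
    info = first_on_the_moon(StubModel())
    print(info)
    # The field names and types are what describe the expected structure.
    print([(f.name, f.type) for f in fields(Info)])
```

Only the class declaration changes compared with the Pydantic version; the query and the call to `extract` stay the same.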

The docs explain the main concepts, include examples and an API reference.

Sibila can be installed from PyPI by doing:

    pip install --upgrade sibila
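One way to confirm that the install worked is to ask Python's packaging metadata for the installed version. This is a generic standard-library check, not something specific to Sibila; the `installed_version` helper name is an assumption made for this sketch.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("sibila")
    if found:
        print(f"sibila {found} is installed")
    else:
        print("sibila is not installed in this environment")
```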

See Getting started for more information.

The Examples show what you can do with local or remote models in Sibila: structured data extraction, classification, summarization, etc.

This project is licensed under the MIT License - see the LICENSE file for details.

Sibila wouldn't be possible without the help of great software and people:

Thank you!

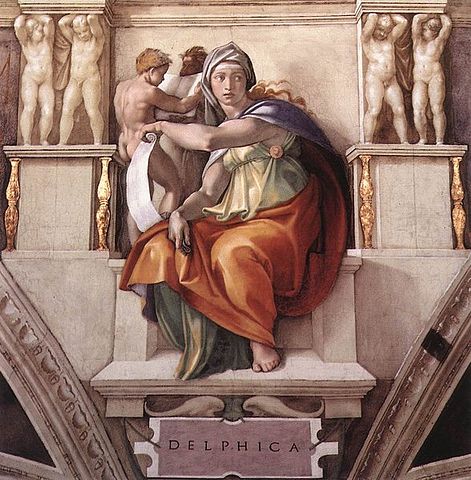

Sibila is the Portuguese word for Sibyl. The Sibyls were wise oracular women in ancient Greece. Their mysterious words puzzled people throughout the centuries, providing insight or prophetic predictions, "uttering things not to be laughed at".

Michelangelo's Delphic Sibyl, on the Sistine Chapel ceiling.